Scaling FTP with SFTPPlus MFT and Load Balancers

High availability and scalability are key for file transfer infrastructure. Whether you're dealing with partner data exchanges or automated system-to-system communication, your FTP services must be resilient and responsive.

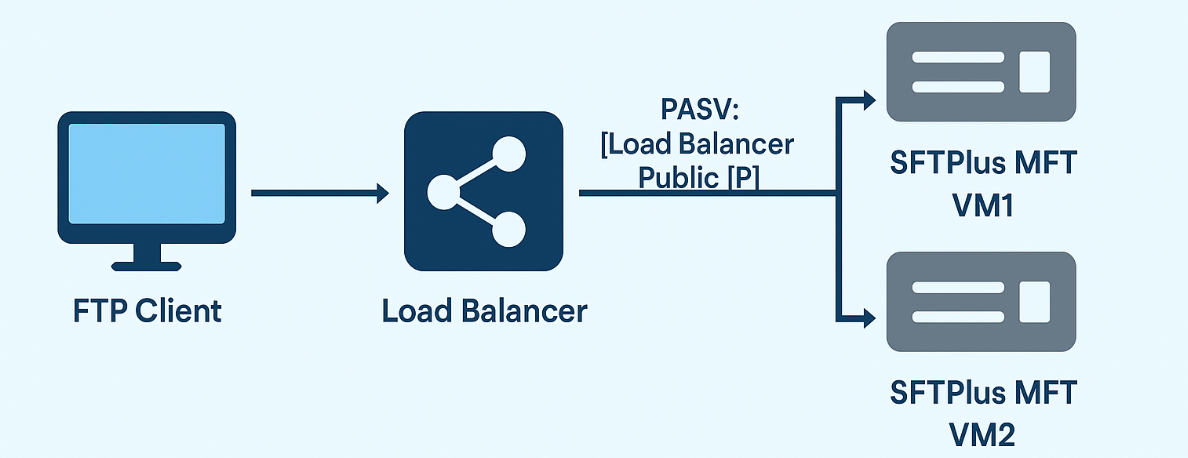

Using SFTPPlus MFT with a load balancer allows you to create a redundant and horizontally scalable FTP server setup across multiple virtual machines (VMs) or containers.

This post explains how SFTPPlus supports this deployment, including FTP passive mode handling and configuration synchronization.

Load Balancer Compatibility

SFTPPlus MFT works seamlessly with any standard load balancer on Windows, Linux or macOS, including:

- 🛡️ F5 BIG-IP

- 🛡️ HAProxy

- 🛡️ NetScaler

- 🛡️ Zscaler

- 🛡️ Traefik

- 🛡️ Fortinet

- ☁️ AWS Elastic Load Balancer (ELB)

- ☁️ Azure Load Balancer

- ☁️ Google Cloud Load Balancing

For FTP, you need to set up a TCP (layer 4) network load balancer. Application load balancers are designed only for HTTP-based protocols and do not support the FTP or SFTP protocols.

The load balancer distributes incoming FTP control connections, on port 21 or 990, across two or more backend VMs or containers, each running an independent instance of SFTPPlus MFT.

The load balancer also has separate forwarding rules for the FTP passive connections. The FTP passive port range is divided into segments, and each segment is allocated to a single VM or container.
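The segment allocation described above can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not SFTPPlus MFT code: the function name `split_passive_range`, the backend names, and the 50000-50299 range are all assumptions chosen for the example.

```python
from __future__ import annotations


def split_passive_range(first_port: int, last_port: int, backends: list[str]) -> dict[str, range]:
    """Divide the inclusive range [first_port, last_port] into one contiguous segment per backend."""
    if not backends:
        raise ValueError("at least one backend is required")
    total = last_port - first_port + 1
    if total < len(backends):
        raise ValueError("not enough ports for the number of backends")

    # Spread any remainder over the first few backends so segments differ by at most one port.
    base, extra = divmod(total, len(backends))
    segments: dict[str, range] = {}
    start = first_port
    for index, name in enumerate(backends):
        size = base + (1 if index < extra else 0)
        segments[name] = range(start, start + size)
        start += size
    return segments


# Hypothetical backends and port range, for illustration only.
segments = split_passive_range(50000, 50299, ["vm-a", "vm-b", "vm-c"])
for name, ports in segments.items():
    print(f"{name}: {ports.start}-{ports.stop - 1}")
# vm-a: 50000-50099
# vm-b: 50100-50199
# vm-c: 50200-50299
```

Each resulting segment would then be configured both as the passive port range of one SFTPPlus MFT instance and as one forwarding rule on the load balancer.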

Configuration and Deployment Architecture

SFTPPlus MFT is designed to be easily replicated across multiple nodes:

- ✅ Single-point configuration: The configuration is created or updated on a primary VM.

- 🔄 Auto-sync: The configuration is automatically synchronized to all other SFTPPlus MFT instances behind the load balancer.

- ⚙️ Each VM runs its own SFTPPlus MFT service but keeps a copy of the unified configuration.

- 🌪️ Even when the primary VM is not available, the remaining SFTPPlus MFT instances will continue to operate.

- ↩️ Each member of the load balancer can be restarted at any time, including the primary instance. The remaining instances will continue to operate.

This ensures consistent operational behavior across the entire FTP cluster.

Check the cluster documentation pages for a detailed description of all the available features.

Passive Mode and IP Advertisement

One common challenge in FTP deployments behind load balancers is how the response to the PASV (passive mode) command advertises the server’s IP address to the client.

SFTPPlus MFT solves this by advertising the public IP of the load balancer, not the internal VM IP. This is a critical detail that prevents FTP clients from trying to connect to a non-routable address.

This feature ensures smooth operation with FTP clients like:

- WinSCP

- FileZilla

- curl

- Embedded systems or scripts
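To make the IP advertisement concrete, here is a minimal sketch of how a PASV reply is encoded on the wire according to RFC 959. It is not SFTPPlus MFT's implementation. The function name `format_pasv_reply` is hypothetical, and `203.0.113.10` is a documentation-only address standing in for the load balancer's public IP.

```python
import ipaddress


def format_pasv_reply(public_ip: str, port: int) -> str:
    """Build a 227 reply that advertises public_ip and port in the h1,h2,h3,h4,p1,p2 form."""
    address = ipaddress.IPv4Address(public_ip)  # raises ValueError for an invalid IPv4 address
    if not 0 < port < 65536:
        raise ValueError("port must be between 1 and 65535")
    octets = str(address).split(".")
    high, low = divmod(port, 256)  # the port is sent as two bytes: port = high * 256 + low
    return f"227 Entering Passive Mode ({','.join(octets)},{high},{low})."


# The client must see the load balancer's public address, never the VM's internal one.
print(format_pasv_reply("203.0.113.10", 50000))
# 227 Entering Passive Mode (203,0,113,10,195,80).
```

If the reply carried an internal address such as `10.0.0.11` instead, a client on the public internet would try to open the data connection to an address it cannot reach.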

Passive Ports

Passive mode FTP requires opening additional ephemeral data ports after the control connection is established. These ports are also routed through the load balancer, so that the same domain name (FQDN) or IP address is used for both the control port and the passive ports.

SFTPPlus MFT handles this by:

- 📦 Dividing the passive port range between each VM behind the load balancer.

- 🔒 Ensuring that new passive connections are forwarded to the same VM that generated the response to the PASV or EPSV command.

- 🍯 Removing the need for sticky sessions or source-IP-based forwarding rules.

This split-range design simplifies firewall rules and works even with the most basic load balancers.

On the load balancer, each passive port range forwards to a single VM. The passive ports are ephemeral and are only active for a short period of time during the initial passive connection handshake, so they can't be monitored directly by the load balancer health checks. Instead, the health check for each passive port range is configured to use the control connection port of the VM associated with that range.
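The health check idea can be sketched as a plain TCP connect against the control port. This is a simplified stand-in for what a load balancer does internally, not a configuration for any particular product. The backend addresses, range labels, and function names below are assumptions made for the example.

```python
import socket

# Hypothetical mapping: passive port range -> (backend address, control port to probe).
HEALTH_CHECK_TARGETS = {
    "50000-50099": ("10.0.0.11", 21),
    "50100-50199": ("10.0.0.12", 21),
}


def control_port_is_healthy(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True when a TCP connection to the control port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


def passive_range_is_healthy(range_label: str, targets=HEALTH_CHECK_TARGETS, timeout: float = 2.0) -> bool:
    """Judge a passive port range by probing the control port of the VM that owns it."""
    host, port = targets[range_label]
    return control_port_is_healthy(host, port, timeout)
```

Probing the control port works because it is always listening while the service is up, whereas a passive port only listens for the brief window between the PASV or EPSV response and the client's data connection.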

The initial control connection from the client is made via:

- 🔌 Port 21 (FTP)

- 🔐 Port 990 (implicit FTPS)

The load balancer routes this control connection to any available SFTPPlus MFT instance. After that, the client is directed (via the PASV or EPSV response) to a specific passive port in the range owned by the responding VM.
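The flow above can be traced with a small sketch that parses a PASV reply the way a client would, then looks up which backend owns the advertised port, as the load balancer's forwarding rules effectively do. The segment table, backend names, and function names are hypothetical. The reply format is the standard RFC 959 one.

```python
from __future__ import annotations

import re

# Hypothetical forwarding table: backend name -> passive ports it owns.
SEGMENTS = {
    "vm-a": range(50000, 50100),
    "vm-b": range(50100, 50200),
}

PASV_PATTERN = re.compile(r"\((\d+),(\d+),(\d+),(\d+),(\d+),(\d+)\)")


def parse_pasv_reply(reply: str) -> tuple[str, int]:
    """Extract the advertised (host, port) pair from a 227 reply."""
    match = PASV_PATTERN.search(reply)
    if not reply.startswith("227") or match is None:
        raise ValueError(f"not a valid PASV reply: {reply!r}")
    numbers = [int(group) for group in match.groups()]
    if any(number > 255 for number in numbers):
        raise ValueError("each field in a PASV reply must fit in one byte")
    host = ".".join(str(number) for number in numbers[:4])
    port = numbers[4] * 256 + numbers[5]
    return host, port


def owning_backend(port: int, segments: dict[str, range] = SEGMENTS) -> str:
    """Return the backend whose passive segment contains the port."""
    for name, ports in segments.items():
        if port in ports:
            return name
    raise LookupError(f"port {port} is outside every passive segment")


host, port = parse_pasv_reply("227 Entering Passive Mode (203,0,113,10,195,180).")
print(host, port, owning_backend(port))
# 203.0.113.10 50100 vm-b
```

Because the port alone identifies the backend, the data connection reaches the VM that issued the reply regardless of which source address the client connects from.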

Check the full configuration options available in SFTPPlus MFT for an FTP or FTPS server.

🎯 Benefits of This Architecture:

- 📈 Scalability: Add more VMs as your FTP traffic grows.

- 🔁 High Availability: If one VM fails, others remain operational.

- 🧩 No special client-side configuration required: works with any standard FTP client, including legacy clients that only support PASV and don't support EPSV.

Advanced file processing

Once a file is uploaded or downloaded via FTP, SFTPPlus MFT can trigger advanced file processing and routing rules.

The same rules apply to SFTP and HTTPS/AS2 transfers. See the article about SFTP load balancing to read more about the advanced managed file processing capabilities of SFTPPlus MFT.

Conclusion

Deploying SFTPPlus MFT behind a load balancer creates a robust, scalable, and secure FTP service that supports enterprise-grade requirements. From automatic configuration sync to smart passive port management, this architecture supports both performance and simplicity.

Whether you're hosting in AWS, Azure, Google Cloud, or on-premises, SFTPPlus MFT offers the flexibility and protocol control needed for a modern FTP infrastructure.

👉 Want to see a live demo or learn how to configure SFTPPlus MFT for your load balancer? Contact our dedicated SFTPPlus support team.